-

Notifications

You must be signed in to change notification settings - Fork 106

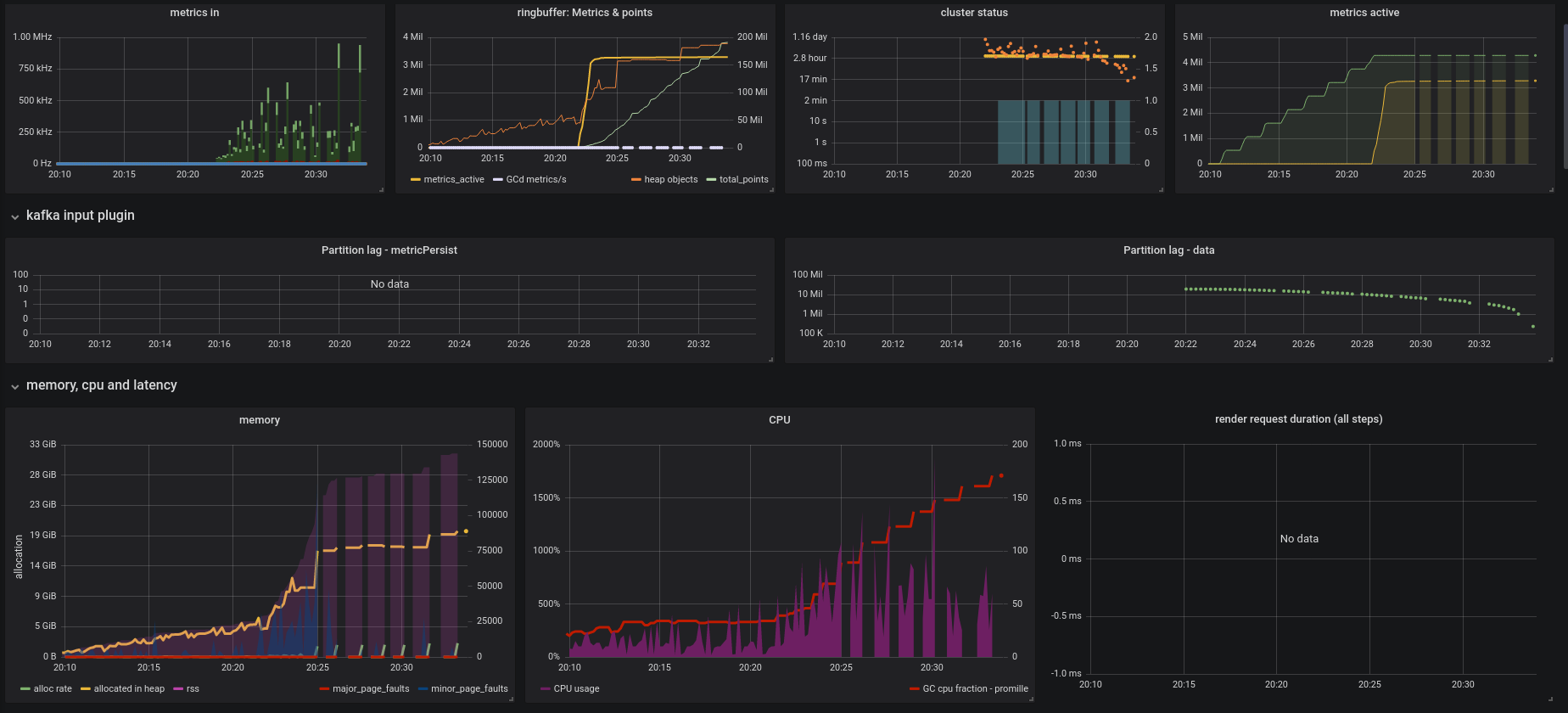

GC resulting in missed stats #1207

Comments

This is also very noticeable during startup/backfill. We now have pods that don't send stats for several minutes.

awoods [9:46 AM]
dieter [9:51 AM] but if we can reproduce this locally, then we can look at a trace to see if that routine has to assist
dieter [10:26 AM]

this could be our problem:

Wouldn't it make sense to have MemoryReporter and ProcessReporter just add metrics to the registry like everything else? I think it is generally a bad idea to try to "collect" metrics during the flush process. Collecting and flushing should be completely separate processes.

I think it's a tradeoff. For collect operations that we know to be brief, we can do them "in sync" with the flush, so we always flush the latest stats.
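To make the "separate collect from flush" option concrete, here is a minimal sketch in Go. It is not the project's actual reporter code: `memGauge`, `collect`, `run`, and `flush` are hypothetical names invented for illustration. The only real API assumed is `runtime.ReadMemStats`, which is the call that can stall while a GC cycle is running. The collector goroutine absorbs that stall, and the flush path only does an atomic load.

```go
package main

import (
	"fmt"
	"runtime"
	"sync/atomic"
	"time"
)

// memGauge is a hypothetical stand-in for a registry gauge.
// The collector goroutine writes it; the flush path only reads it.
type memGauge struct {
	heapAlloc uint64 // accessed only through sync/atomic
}

// collect samples memory stats once. ReadMemStats may block while a GC
// cycle is in progress, so it is kept off the flush path.
func (g *memGauge) collect() {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	atomic.StoreUint64(&g.heapAlloc, m.HeapAlloc)
}

// run collects immediately and then once per interval until stop is closed.
func (g *memGauge) run(interval time.Duration, stop <-chan struct{}) {
	ticker := time.NewTicker(interval)
	defer ticker.Stop()
	for {
		g.collect()
		select {
		case <-ticker.C:
		case <-stop:
			return
		}
	}
}

// flush returns the last collected value without touching the runtime,
// so a slow collect can delay freshness but never the flush itself.
func (g *memGauge) flush() uint64 {
	return atomic.LoadUint64(&g.heapAlloc)
}

func main() {
	g := &memGauge{}
	stop := make(chan struct{})
	go g.run(time.Second, stop)

	time.Sleep(50 * time.Millisecond) // give the collector time for a first sample
	fmt.Println("heap_alloc_bytes", g.flush())
	close(stop)
}
```

The cost of this design is the tradeoff mentioned above: the flushed value can be up to one collect interval old, whereas collecting in sync with the flush always reports the latest stats.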


plan of action:

if none of that works, further investigation will be required

The upstream fix for golang/go#19812 was merged into the Go git repository just a few days after the 1.13 release.

This means the fix will not be available until go1.14 is released in Feb 2020 (we cannot and should not use a non-release version of Go).

Wouldn't it be OK to backport the one patch we need to 1.13?

The answer from awoods is no, in part because we are not equipped to support a patched version of Go, especially with regard to users who don't want to run a patched version of Go.

Try to reproduce locally with a docker-compose stack, then try to get a Go execution trace.

FWIW, a fix for golang/go#19812 has been merged in Go.

update: they reverted that fix :o

The Go team is working on another fix now.

The new fix seems to be in Go tip/master.

This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.
