- Cooperating processes may either directly share a logical address space or be allowed to share data through files.

- Concurrent access to shared data may result in data inconsistency:

- Processes are interleaved in time (single processor system)

- Processes are interleaved and overlapped (multi-processor system).

- Consider a simple example of procedure “echo” which takes input from the

keyboard and display it:

- Two processes calling the procedure simultaneously.

- Process Synchronization

- Maintaining data consistency requires mechanisms to ensure the orderly execution of cooperating processes

- Bounded buffer example

- Suppose that we wanted to provide a solution to the consumer-producer problem that fills all the buffers. We can do so by having an integer counter that keeps track of the number of full buffers. Initially, counter is set to 0. It is incremented by the producer after it produces a new buffer and is decremented by the consumer after it consumes a buffer.

- Race condition

- The situation where several processes access and manipulate shared data concurrently.

- The final value of the shared data depends upon which process finishes last.

- To prevent a race condition, concurrent processes must be synchronized.

- Ensure that only one process at a time is manipulating the variable counter.

- The statements (1 line of C code may consist of multiple instructions)

counter++;orcounter--;must be performed atomically. - Atomic operation

- an operation that completes in its entirety without interruption

- Critical Section (CS) Problem

- n processes all competing to use some shared data

- Each process has a code segment, called critical section, in which the shared data is accessed.

- Problem

- How to ensure that when one process is executing in its critical section, no other process is allowed to execute in its critical section.

- Execution of critical sections by the processes is thus mutually exclusive.

- Solutions

- Software, hardware, OS and high-level constructs

- Solution requirements

- Three requirements

- Mutual Exclusion

- If process Pi is executing in its critical section, then no other processes can be executing in their critical sections

- One each time

- Progress

- If no process is executing in its critical section and there exist some processes that wish to enter their critical section, then the selection of the processes that will enter the critical section next cannot be postponed indefinitely

- Decision must be made

- Bounded Waiting

- A bound must exist on the number of times that other processes are allowed to enter their critical sections after a process has made a request to enter its critical section and before that request is granted

- Everyone has a chance to get in eventually

- Assume that each process executes at a nonzero speed

- No assumption concerning relative speed of the N processes

- Three requirements

- CS kernel problem

- A kernel code is subject to several possible race conditions

- Kernel data structure that maintains a list of all open files in the system

- Other structures for maintaining memory allocation, for maintaining process lists, and for interrupt handling

- Non-preemptive kernel – runs until exits kernel mode, blocks, or voluntarily

yields CPU

- Essentially free from race conditions

- Preemptive kernel – allows preemption of process running in kernel mode

- is not free from race conditions

- It allows a process to be preempted while it is running in kernel mode

- Preemptive kernels are used in real-time systems, Linux 2.6 kernel, Solaris

- Two-process solution

- Bakery algorithm

- Basic hardware features

- TestAndSet

- Swap

- Mutual exclusion

- Bounded waiting

-

Synchronization tool that provides more sophisticated ways (than Mutex locks) for process to synchronize their activities.

-

Semaphore S – integer variable

-

Can only be accessed via two indivisible (atomic) operations

wait()andsignal()- Originally called

P()andV()

- Originally called

-

Definition of the wait() operation

wait(S) {

while (S <= 0)

; // busy wait

S--;

}- Definition of the signal() operation

signal(S) {

S++;

}- Counting semaphore – integer value can range over an unrestricted domain

- Binary semaphore – integer value can range only between 0 and 1

- Same as a mutex lock

- Can solve various synchronization problems

- Consider P1 and P2 that require S1 to happen before S2

- Create a semaphore “synch” initialized to 0

P1:

S1;

signal(synch);

P2:

wait(synch);

S2;- Provides mutual exclusion

Semaphore mutex; //initialized to 1

do {

wait (mutex);

// Critical Section

signal (mutex);

// remainder section

} while (true);- Must guarantee that no two processes can execute the wait() and signal()on the same semaphore at the same time

- Thus, the implementation becomes the critical section problem

where the wait and signal code are placed in the critical

section

- Using busy waiting in critical section implementation

- Implementation code is short; Little busy waiting if critical section rarely occupied

- Problem – Applications may spend lots of time in critical sections and therefore this is not a good solution

- Using busy waiting in critical section implementation

- Busy waiting solution

- While a process is in its critical section, any other process that tries to enter its critical section must loop continuously in the entry code.

- Spinlock semaphore - Process spins while waiting for the lock.

- Problem – Busy waiting wastes CPU cycles.

- Blocking solution: Avoiding busy waiting with two operations

- block suspends the invoking process and put it on a waiting state.

- wakeup(P) resumes the execution of a blocked process P to the ready state (subject to CPU scheduling).

- Define a semaphore as a struct

typedef struct {

int value;

struct process *L;

} semaphore;-

The semaphore has an integer value and a list of waiting processes.

-

Semaphore operations defined as

wait(S):

S.value--;

if (S.value < 0) {

add this process to S.L;

block;

}

signal(S):

S.value++;

if (S.value <= 0) {

remove a process P from S.L;

wakeup(P);

}- Negative value of semaphore:

- Its magnitude gives the number of waiting processes

- The waiting list – implemented as a link field to each PCB

- Each semaphore contains an integer and a pointer to the list of PCBs

- FIFO queue of the waiting processes

- The wait and signal are provided by OS as basic system calls

- Implementation – Mutual exclusive execution of wait and signal operations on the same semaphore:

- Uniprocessor environment:

- Disabling interrupts during the time the wait and signal operations are executing

- Multiprocessor environment:

- Spinlocks

- Uniprocessor environment:

- Example: Implementation in Linux

struct semaphore {

spinlock_t lock;

unsigned int count;

struct list_head wait_list;

};

down()

{

spin_lock_irqsave(&sem->lock, flags);

if (likely(sem->count > 0))

sem->count--;

else

__down(sem);

spin_unlock_irqrestore(&sem->lock, flags);

}

up()

{

spin_lock_irqsave(&sem->lock, flags);

if (likely(list_empty(&sem->wait_list)))

sem->count++;

else

__up(sem);

spin_unlock_irqrestore(&sem->lock, flags);

}

__down(sem)

{

list_add_tail(&waiter.list, &sem->wait_list);

}

__up(sem)

{

list_del(&waiter->list);

}- Semaphores

- a powerful and flexible tool for enforcing mutual exclusion and for coordinating processes.

- Each process must execute wait(mutex) before entering the critical section and signal(mutex) afterward.

- Incorrect use of semaphores can result in timing errors

- When the sequence of wait-signal execution is not valid.

- When wait and signal are scattered throughout a program making it difficulty to see their overall effect on the semaphore.

- Incorrect use of semaphores

- Interchange the order in which the wait and signal occur (common)

- Violate mutual exclusion.

- Replace a signal with wait (self-waiting)

- Cause deadlock.

- Either of signal or wait is missing.

- Both problems.

- Interchange the order in which the wait and signal occur (common)

- Deadlock and starvation are possible

- Deadlock – two or more processes are waiting indefinitely for an

event that can be caused by only one of the waiting processes

- Let S and Q be two semaphores initialized to 1

| P0 | P1 |

|---|---|

| wait(S); | wait(Q); |

| wait(Q); | wait(S); |

| ... | ... |

| signal(S); | signal(Q); |

| signal(Q); | signal(S); |

- Starvation – indefinite blocking

- A process may never be removed from the semaphore queue in which it is suspended

- Happens with LIFO (last-in, first-out) order

- Priority Inversion – Scheduling problem when lower-priority process

holds a lock needed by higher-priority process

- Solved via priority-inheritance protocol

- any processes that are accessing resources needed by a higher-priority process inherit the higher priority until they are done with the resource.

- Implementation

- Pool of n buffers, each capable of holding one item

- Producer adds items to buffer; consumer removes items from buffer

- Shared data

semaphore mutex;

semaphore full;

semaphore empty;

- The mutex provides mutual exclusion for accesses to the buffer pool

- The empty and full count the number of empty and full buffers.

- Initially:

mutex = 1, full = 0, empty = n;

- Code for producer and consumer processes Note: the symmetry between the producer and consumer.

Producer

do {

// …

// produce an item in nextp

// …

wait(empty);

wait(mutex);

// …

// add nextp to buffer

// …

signal(mutex);

signal(full);

} while (1);Consumer

do {

wait(full)

wait(mutex);

// …

// remove an item from buffer to nextc

// …

signal(mutex);

signal(empty);

// …

// consume the item in nextc

// …

} while (1);- Shared data (e.g., a file or record):

- Readers – only read the shared object

- Writers – update (read and write) the shared object

- Problem – if a writer and some other process (either a reader or writer) access the shared data simultaneously

- Two variations of readers-writers problem

- First readers – writers problem:

- No reader will wait unless a writer has already got permission to use the shared object (no reader should wait simply because a writer is waiting)

- Writers may starve.

- Second readers – writers problem:

- Once a writer is ready, that writer performs its write as soon as possible by letting no new readers start reading.

- Readers may starve.

- First readers – writers problem:

- Consider the first readers – writers problem:

- Shared data (e.g., a file or record):

int readcount;semaphore mutex, wrt;readcountkeeps track of how many processes are currently reading the object.mutexensures mutual exclusion whenreadcountis updated.wrtis common to both the reader and writer processes (acts as a mutual exclusion semaphore for the writers).

- Initially:

mutex = 1 /* only one can update readcount */wrt = 1 /* only one writer can be in */readcount = 0 /* number of readers*/

- Shared data (e.g., a file or record):

- Code for writer and reader processes:

Writer

wait(wrt);

//…

//writing is performed

//…

signal(wrt);Reader

wait(mutex);

readcount++;

if (readcount == 1)

wait(wrt);

signal(mutex);

// …

// reading is performed

// …

wait(mutex);

readcount--;

if (readcount == 0)

signal(wrt);

signal(mutex):- Five philosophers are thinking and eating.

- Five chairs, five single chopsticks and one rice bowl

- When a philosopher gets hungry, he or

she tries to pick up the two chopsticks that

are closest.

- A philosopher can pick up only one chopstick at a time.

- When a philosopher has two chopsticks,

she eats.

- Release chopsticks after finishing eating.

- Dinning-Philosophers problem:

- How to allocate several resources among several processes to have concurrencycontrol in a deadlock- and starvation-free manner.

- Shared data

- Bowl of rice (data set)

- Semaphore chopstick [5] initialized to 1

- Represent each chopstick by a semaphore.

- Shared data:

semaphore chopstick[5];, initially all elements are 1. Initially all elements are 1. - This guarantees that no two neighbors are eating simultaneously but may create a deadlock

- Each philosopher grabs her left chopstick at the same time

- All elements of semaphore are zero.

Philosopher i:

do {

wait(chopstick[i]);

wait(chopstick[(i+1)%5]);

// …

// eat

// …

signal(chopstick[i]);

signal(chopstick[(i+1)%5]);

// …

// think

// …

} while (TRUE);- Deadlock problem

- If all philosophers pick up their left-hand-side chopsticks, they are running into a dead-lock situation.

- Deadlock handling

- Allow at most 4 philosophers to be sitting simultaneously at the table.

- Allow a philosopher to pick up the chopsticks only if both are available (picking must be done in a critical section.

- Use an asymmetric solution -- an odd-numbered philosopher picks up first the left chopstick and then the right chopstick. Even-numbered philosopher picks up first the right chopstick and then the left chopstick.

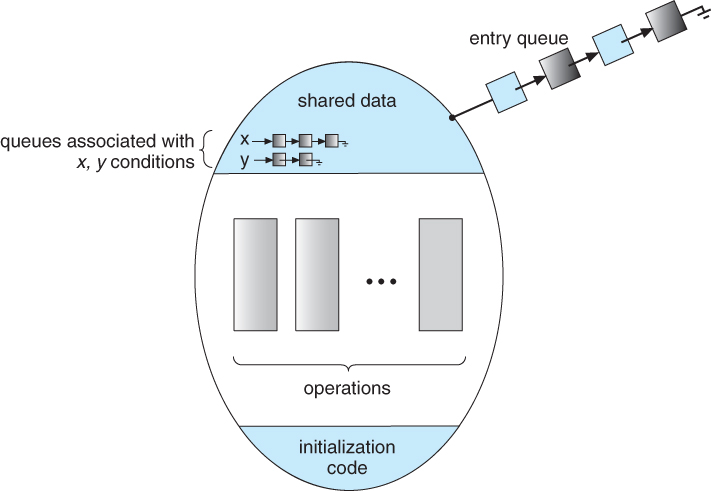

- Concept

- Condition construct

- Dining philosophers example

- Implementation

- Process resumption order

- Resource allocation

- Monitor related issues

- In parallel programming, a monitor is a synchronization construct that allows threads to have both mutual exclusion and the ability to wait (block) for a certain condition to become true. Monitors also have a mechanism for signaling other threads that their condition has been met.

- In essence, a monitor

M(m, c)is a pair of a mutex (lock) objectmand a condition variablec.- A condition variable is basically a container of threads that are waiting on a certain condition.

- Monitors provide a mechanism for threads to temporarily give up exclusive access in order to wait for some condition to be met, before regaining exclusive access and resuming their task.

- For many applications, mutual exclusion is not enough. Threads attempting

an operation may need to wait until some conditions met.

- Busy waiting on condition inside mutex does not work – Mutex will prevent other processes to get in and make the condition TRUE

- The solution – Condition variables

- Logically a queue of threads, on which a thread may wait for some condition to become true.

- While a thread is waiting on a condition variable, the thread is “occupying” the monitor, other threads can enter the monitor.

- So, other threads may signal the condition variable to indicate that the condition is true to resume the thread waiting for the condition.

- High-level synchronization construct that allows the safe sharing of an abstract data type (only) among concurrent processes.

- Software module consisting of procedures, local data variables, and initialization code.

- Only one process may be active within the monitor at a time

- Implemented in different programming languages as well as a program library

- Allows one to put monitor locks on any object.

- Not powerful enough to model some synchronization schemes

monitor monitor-name

{

// shared variable declarations

procedure body P1 (…) {

// ...

}

procedure body P2 (…) {

// ...

}

procedure body Pn (…) {

// ...

}

initialization code (...) {

// ...

}

}- Data define the state of an instance of the monitor type

- Bodies of procedures or functions that implement operations on the type

- Only one process at a time can be active within the monitor.

Monitors with Condition Construct

- To allow a process to wait for the monitor, a condition variable must be declared, as

condition x; - Condition variable can only be used with

waitandsignal.- The operation

x.wait();means that the process invoking this operation is suspended until another process invokesx.signal(); - The

x.signaloperation resumes exactly one suspended process. If no process is suspended, then thesignaloperation has no effect.

- The operation

- Variables

// The waiting list of threads that have never entered the

// monitor before

semaphore mutex; // (initially = 1)

// The waiting list of threads

// that have entered the

// monitor before

semaphore next; // (initially = 0)

int next_count = 0; // # of processes suspended on next- Each procedure F will be replaced by

// Before entering the monitor

// (for accessing shared data)

wait(mutex);

// …

// body of F;

// …

if (next_count > 0){

// Whenever there is a thread waiting on next, reactivate it

signal(next);

}

else{

// Otherwise, let a thread that has never entered the monitor to enter

signal(mutex);

}- Mutual exclusion within a monitor is ensured

- For each condition variable x,

semaphore x_sem; // (initially = 0)

int x_count = 0;- Operation

x.wait:

x_count++; // going to wait

if (next_count > 0) // if a thread is waiting on next list

signal(next); // let it in

else // otherwise

signal(mutex); // let a new thread in

wait(x_sem); // wait on condition list

x_count--; // leave the condition list- Operation

x.signal:

if (x_count > 0) {

next_count++; // to be added back to next queue

signal(x_sem); // condition is me, wake a thread waiting on x_sem

wait(next); // wait on next list

next_count--;

} else {

// do nothing

}- A philosopher is allowed to pick up her chopsticks only if both of them are available – a deadlock-free solution.

monitor DiningPhilosophers

{

enum { THINKING; HUNGRY, EATING} state [5] ;

condition self [5];

void pickup (int i);

void putdown (int i);

void test (int i);

initialization_code() {

for (int i = 0; i < 5; i++)

state[i] = THINKING;

}

void pickup (int i) {

state[i] = HUNGRY; // "i want to eat"

test(i);

if (state[i] != EATING) self[i].wait(); // cannot eat now

}

void putdown (int i) {

state[i] = THINKING;

// test left and right neighbors

test((i + 4) % 5);

test((i + 1) % 5); // "tell neighbors i'm done"

}

void test (int i) {

if ((state[(i + 4) % 5] != EATING) && // left neighbor not eating

(state[i] == HUNGRY) && // right neighbor not eating

(state[(i + 1) % 5] != EATING) ) {

state[i] = EATING;

self[i].signal (); // unsuspend the philosopher

}

}

}- Sequence of the operations invoked by philosopher i:

dp.pickup(i);

// ...

// eat

// ...

dp.putdown(i);- Condition Variables Choices

- If process P invokes

x.signal(), and process Q is suspended inx.wait(), what should happen next?- Q and P cannot execute in parallel. If Q is resumed, then P must wait

- Options include

- Signal and wait

- P waits until Q either leaves the monitor or it waits for another condition

- Signal and continue

- Q waits until P either leaves the monitor or it waits for another condition

- Both have pros and cons--language implementer can decide

- Monitors implemented in Concurrent Pascal compromise

- P executing signal immediately leaves the monitor, Q is resumed

- Implemented in other languages including Mesa, C#, Java

- Signal and wait

- If process P invokes

- Which of the suspended processes should be resumed next:

- FCFS ordering – process waiting the longest is resumed first

- Conditional-wait construct:

x.wait(c);c– integer expression evaluated when the wait operation is executed.- value of

c(a priority number) stored with the name of the process that is suspended. - when

x.signalis executed, process with the smallest associated priority number is resumed next.

- Solaris

- Implements a variety of locks to support multitasking, multithreading (including real-time threads), and multiprocessing

- Uses adaptive mutexes for efficiency when protecting data from short code segments

- Starts as a standard semaphore spin-lock

- If lock held, and by a thread running on another CPU, spins

- If lock held by non-run-state thread, block and sleep waiting for signal of lock being released

- Uses condition variables for long code sections

- Uses readers-writers locks when longer sections of code need access to data in a mostly read-only manner

- Uses turnstiles to order the list of threads waiting to acquire either an adaptive mutex or reader-writer lock

- Turnstiles are per-lock-holding-thread, not per-object

- Priority-inheritance per-turnstile gives the running thread the highest of the priorities of the threads in its turnstile

- Windows XP

- Uses interrupt masks to protect access to global resources on uniprocessor systems

- Uses spinlocks on multiprocessor systems

- Spinlocking-thread will never be preempted

- Also provides dispatcher objects user-land which may act

mutexes, semaphores, events, and timers

- Events

- An event acts much like a condition variable

- Timers notify one or more thread when time expired

- Dispatcher objects either signaled-state (object available) or non-signaled state (thread will block)

- Events

- Linux

- Prior to kernel Version 2.6, disables interrupts to implement short critical sections

- Version 2.6 and later, fully preemptive

- Provides

- Semaphores

- atomic integers

- spinlocks

- reader-writer versions of both

- On single-CPU system, spinlocks replaced by enabling and disabling kernel preemption

- Pthreads

- Pthreads API is OS-independent

- Provides:

- mutex locks

- condition variables

- Non-portable extensions include:

- read-write locks

- spin locks

- System model

- Log-based recovery

- Checkpoints

- Concurrent atomic transactions

- Volatile storage

- information stored here does not survive system crashes

- Example: main memory, cache

- Nonvolatile storage

- Information usually survives crashes

- Example: disk, tape, flash SSD

- Stable storage

- Information never lost (never say never?)

- Not actually possible, so approximated via replication or RAID to devices with independent failure modes

Goal is to assure transaction atomicity where failures cause loss of information on volatile storage

- Assures that operations happen as a single logical unit of work, in its

entirety, or not at all

- Related to field of database systems

- Challenge is assuring atomicity despite computer system failures

- Transaction - collection of instructions or operations that performs single logical function

- Transaction is series of read and write operations

- Terminated by commit (transaction successful) or abort (transaction failed) operation

- Aborted transaction must be rolled back to undo any changes it performed

- Here we are concerned with changes to stable storage (Disk)

- Record to stable storage information about all modifications by a transaction

- Most common is write-ahead logging

- Log on stable storage, each log record describes single transaction write operation, including

- Transaction name

- Data item name

- Old value

- New value

- written to log when transaction Ti starts

- written when Ti commits

- Log on stable storage, each log record describes single transaction write operation, including

- Log entry must reach stable storage before operation on data occurs

- Recovery Algorithm

- Using the log, system can handle any volatile memory errors

Undo(Ti)restores value of all data updated by TiRedo(Ti)sets values of all data in transaction Ti to new values

Undo(Ti)andredo(Ti)must be idempotent- Multiple executions must have the same result as one execution

- If system fails, restore state of all updated data via log

- If log contains without

undo(Ti)

- If log contains and

redo(Ti)

- If log contains without

- Using the log, system can handle any volatile memory errors

- Log could become long, and recovery could take long

- Checkpoints shorten log and recovery time.

- Checkpoint scheme:

- Output all log records currently in volatile storage to stable storage

- Output all modified data from volatile to stable storage

- Output a log record to the log on stable storage

- Now recovery only includes Ti, such that Ti started executing before the most recent checkpoint, and all transactions after Ti. All other transactions already on stable storage

- Transactions may arrive in parallel

- Must be equivalent to serial execution – serializability

- Could perform all transactions in critical section

- Inefficient, too restrictive

- Concurrency-control algorithms provide serializability

- Consider two data items A and B

- Consider Transactions T0 and T1

- Execute T0, T1 atomically

- Execution sequence called schedule

- Atomically executed transaction order called serial schedule

- For N transactions, there are N! valid serial schedules

| T0 | T1 |

|---|---|

| read(A) | |

| write(A) | |

| read(B) | |

| write(B) | |

| read(A) | |

| write(A) | |

| read(B) | |

| write(B) |

Schedule 1: T0 then T1

- Nonserial schedule allows overlappedexecute

- Resulting execution not necessarily incorrect

- Consider schedule S, operations Oi, Oj

- Conflict if access same data item, with at least one write (R-R has not conflict)

- If Oi, Oj consecutive and operations of different transactions & Oi and Oj don’t conflict

- Then S’ with swapped order Oj, Oi equivalent to S

- If S can become S’ via swapping nonconflicting operations

- S is conflict serializable

| T0 | T1 |

|---|---|

| read(A) | |

| write(A) | |

| read(A) | |

| write(A) | |

| read(B) | |

| write(B) | |

| read(B) | |

| write(B) |

Schedule 2: Concurrent Serializable Schedule

- Ensure serializability by associating lock with each data item

- Follow locking protocol for access control

- Locks

- Shared

- Ti has shared-mode lock(S) on item Q, Ti can read Q but not write Q

- Exclusive

- Ti has exclusive-mode lock(X) on Q, Ti can read and write Q

- Shared

- Require every transaction on item Q acquire appropriate lock

- If lock already held, new request may have to wait

- Similar to readers-writers algorithm

- Generally ensures conflict serializability

- Each transaction issues lock and unlock requests in two phases

- Growing – obtaining locks

- Shrinking – releasing locks

- Ensure conflict serializability.

- Does not prevent deadlock

- Select order among transactions in advance – timestamp-ordering

- Transaction Ti associated with timestamp TS(Ti) before Ti starts

- TS(Ti) < TS(Tj) if Ti entered system before Tj

- TS can be generated from system clock or as logical counter incremented at each entry of transaction

- Timestamps determine serializability order

- If TS(Ti) < TS(Tj), system must ensure produced schedule equivalent to serial schedule where Ti appears before Tj

- Data item Q gets two timestamps

- W-timestamp(Q) – largest timestamp of any transaction that executed write(Q) successfully

- R-timestamp(Q) – largest timestamp of successful read(Q)

- Updated whenever read(Q) or write(Q) executed

- Timestamp-ordering protocol assures any conflicting read and write executed in timestamp order

- Suppose Ti executes read(Q)

- If TS(Ti) < W-timestamp(Q), Ti needs to read value of Q that was already overwritten

- read operation rejected and Ti rolled back

- If TS(Ti) ≥ W-timestamp(Q)

- read executed, R-timestamp(Q) set tomax(R-timestamp(Q), TS(Ti))

- If TS(Ti) < W-timestamp(Q), Ti needs to read value of Q that was already overwritten

| T2 | T3 |

|---|---|

| read(B) | |

| read(B) | |

| write(B) | |

| read(A) | |

| read(A) | |

| write(A) |

Schedule Possible Under Timestamp Protocol

- Suppose Ti executes write(Q)

- If TS(Ti) < R-timestamp(Q), value Q produced by Ti was needed previously and Ti assumed it would ever be produced

- Write operation rejected, Ti rolled back

- If TS(Ti) < W-tiimestamp(Q), Ti attempting to write obsolete value of Q

- Write operation rejected and Ti rolled back

- Otherwise, write executed

- If TS(Ti) < R-timestamp(Q), value Q produced by Ti was needed previously and Ti assumed it would ever be produced

- Any rolled back transaction Ti is assigned new timestamp and restarted

- Algorithm ensures conflict serializability and freedom from deadlock

| T2 | T3 |

|---|---|

| read(B) | |

| read(B) | |

| write(B) | |

| read(A) | |

| read(A) | |

| write(A) |

Schedule Possible Under Timestamp Protocol

- Synchronization problem arises when cooperating processes share data.

- Critical section problem

- How to ensure that a critical section code is executed by one process at a time-– mutual exclusion to be enforced.

- CS solutions

- Software support with users’ codes

- Bakery algorithm for n-process CS problem

- Hardware support for atomic execution

- Semaphores with OS support

- A semaphore is accessed only through wait and signal operations

- Software support with users’ codes

- Classic synchronization problems

- Bounded-buffer problem

- Readers-writers problem

- Dinning-philosophers problem

- Higher-level synchronization constructs: Monitors.

- Atomic transactions

- Ensure atomicity despite system failures.