
GVTg New Architecture Introduction Update

INTEL GVT-G IN UPSTREAM LINUX KERNEL – ZHI WANG

To attract more development effort from the worldwide community and to rapidly extend the reach of Intel's cutting-edge graphics virtualization technology, Intel GVT-g [1], upstreaming the code became one of the most critical tasks of the Intel GVT-g team in 2016.

Before the upstream process began, several rounds of design discussions were held with the Intel i915 team, and a clear direction finally emerged: GVT-g would become a sub-module of i915. Following this direction required major architectural changes to the existing GVT-g production code tree:

- The virtual device model will serve only VMs. The host i915 driver will access hardware resources directly.

- The virtual device model will request VM resources from the host driver.

- HW interrupts will not be routed to the virtual device model.

- The virtual device model's workload submission path will become a client of the host workload submission system.

- The MMIO emulation in the device model will not touch HW registers [2].

- Ring buffer mode support is dropped from the virtual device model, which means the upstream implementation does not support Gen7 or earlier graphics.

Several layers were redesigned and refactored:

- Introduce VFIO MDEV based vGPU lifecycle management

- Introduce new vGPU resource management based on host graphics resource management

- Introduce full GPU interrupt virtualization framework

- Introduce new vGPU workload scheduling/dispatching framework

- Introduce new virtual HW submission layer [3]

- Introduce full display virtualization with dmabuf-based guest framebuffer export support

Upstreaming Intel GVT-g in particular involves changes to many components of the Linux kernel and to userspace emulators. Different vendors have different requirements, which can lead to very different designs and implementations. To reach a generic, standardized framework, Intel architects and developers, Red Hat maintainers, and NVIDIA developers held many in-depth discussions. The result is a new framework named MDEV (Mediated Device) [4] under VFIO (Virtual Function I/O), jointly developed by NVIDIA, Red Hat, and Intel, which has been designed and merged upstream.

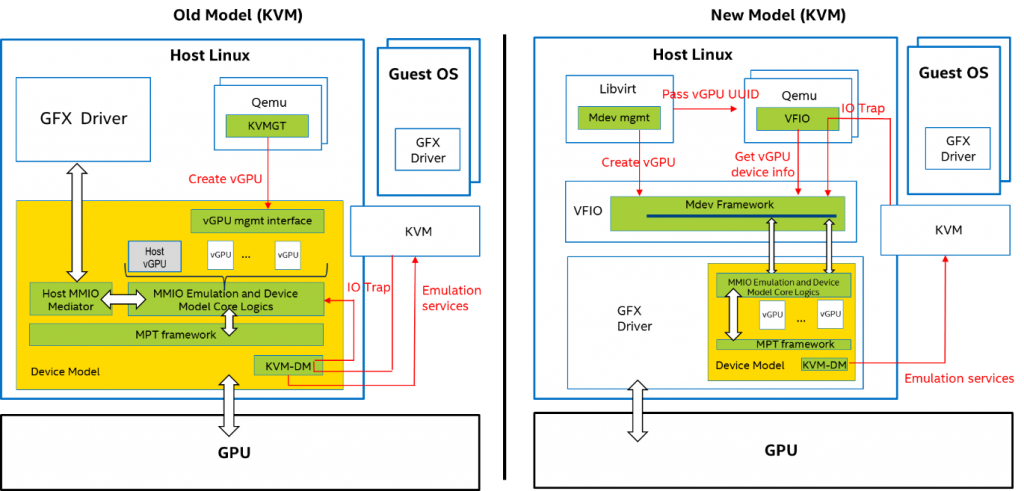

Figure 1. Old Architecture vs New Architecture

In the new architecture, the vGPU lifecycle is managed by the VFIO MDEV framework, a generic framework for managing all mediated devices. The mediated device driver registers itself with both the Linux device model and the VFIO framework. After the mediated device driver is loaded successfully, a directory named "mdev_supported_types", which lists all supported types of mediated devices, appears in the sysfs node of the Linux device. The user can create a mediated device directly by echoing a UUID to "/sys/…/mdev_supported_types/[type]/create". A directory named after that UUID, representing the mediated device, then appears in the same folder as "mdev_supported_types". To attach this mediated device to QEMU [4], which is usually the userspace device emulator, the user passes the mediated device, identified by its UUID, to QEMU. The user can destroy the mediated device by echoing 1 to /sys/…/$UUID/remove.
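The lifecycle steps above amount to writing small strings into sysfs files. The following is a minimal Python sketch, assuming the caller already knows the parent device's sysfs directory (the part elided as "…" above) and a type name; the function names `create_mdev`, `remove_mdev`, and `qemu_vfio_args` are hypothetical helpers, not part of any existing tool. The QEMU argument uses the `vfio-pci,sysfsdev=` option, with the mdev's sysfs path supplied by the caller.

```python
import uuid
from pathlib import Path


def create_mdev(parent_dev: Path, mdev_type: str) -> str:
    """Create a mediated device; returns the UUID that names it.

    parent_dev is assumed to be the sysfs directory of the physical device,
    i.e. the directory that contains 'mdev_supported_types'.
    """
    mdev_uuid = str(uuid.uuid4())
    create_file = parent_dev / "mdev_supported_types" / mdev_type / "create"
    # Same effect as: echo $UUID > .../mdev_supported_types/[type]/create
    create_file.write_text(mdev_uuid)
    return mdev_uuid


def remove_mdev(mdev_dir: Path) -> None:
    """Destroy a mediated device: echo 1 > .../$UUID/remove."""
    (mdev_dir / "remove").write_text("1")


def qemu_vfio_args(mdev_sysfs_path: Path) -> list[str]:
    """QEMU arguments that hand the mediated device to a guest via vfio-pci."""
    return ["-device", f"vfio-pci,sysfsdev={mdev_sysfs_path}"]
```

Generating the UUID in the caller rather than letting the kernel pick one mirrors the interface described above: the user chooses the name, so it can be handed to QEMU without reading anything back from sysfs.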

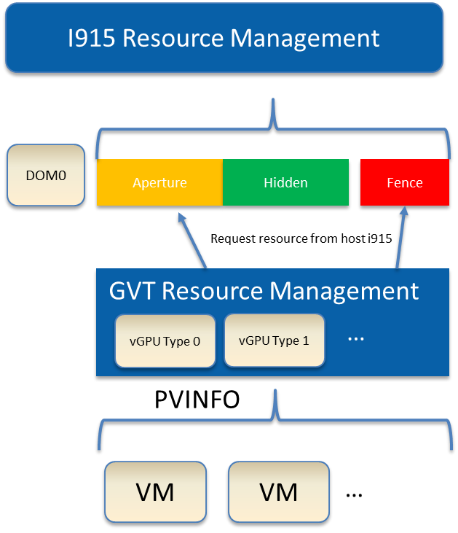

Because the virtual device model is a sub-module of the host i915 driver, it also requests vGPU resources from the host i915 driver. An Intel vGPU mainly needs three types of resources [6]:

- Aperture

- Hidden graphics memory space

- Fence registers

The vGPU resource management requests from the host i915 driver the amount of each resource specified by the vGPU type in "mdev_supported_types".
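One way to picture type-based allocation is as a quota check against a host pool. The Python sketch below assumes each vGPU type carries a fixed, non-zero amount of aperture, hidden graphics memory, and fence registers; the class names and all numbers are illustrative, not the real GVT-g data structures or type definitions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VgpuType:
    """Per-type resource quota (illustrative values; all assumed > 0)."""
    name: str
    aperture_mb: int    # CPU-mappable graphics memory
    hidden_gm_mb: int   # non-mappable ("hidden") graphics memory
    fences: int         # fence registers


@dataclass
class HostPool:
    """Resources the host i915 driver still has free for vGPUs."""
    aperture_mb: int
    hidden_gm_mb: int
    fences: int

    def available_instances(self, t: VgpuType) -> int:
        # The scarcest resource limits how many more vGPUs of this type fit.
        return min(self.aperture_mb // t.aperture_mb,
                   self.hidden_gm_mb // t.hidden_gm_mb,
                   self.fences // t.fences)

    def allocate(self, t: VgpuType) -> None:
        # All-or-nothing: refuse if any single resource is short.
        if self.available_instances(t) < 1:
            raise RuntimeError(f"not enough host resources for {t.name}")
        self.aperture_mb -= t.aperture_mb
        self.hidden_gm_mb -= t.hidden_gm_mb
        self.fences -= t.fences

    def free(self, t: VgpuType) -> None:
        # Return a destroyed vGPU's share to the pool.
        self.aperture_mb += t.aperture_mb
        self.hidden_gm_mb += t.hidden_gm_mb
        self.fences += t.fences
```

Checking every resource before subtracting any of them keeps the pool consistent when a request fails partway.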

Figure 2. vGPU Resource Management in New Architecture

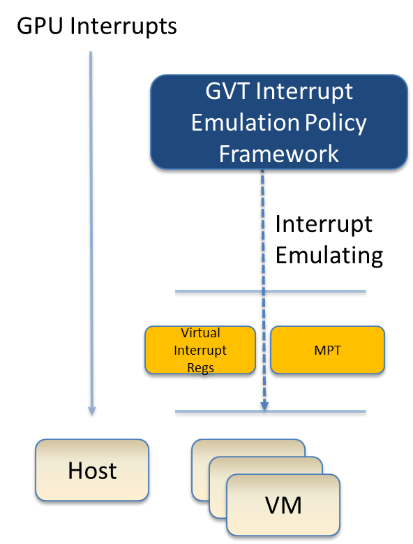

In the new architecture, the virtual device model does not touch HW interrupts, because it is hard to merge the interrupt-control logic of the virtual device model with that of the host driver. To avoid architectural changes in the host driver, host GPU interrupts are not routed to the virtual device model, so the virtual device model must handle GPU interrupt virtualization by itself. Virtual GPU interrupts fall into three categories:

- Periodic GPU interrupts are emulated by timers, e.g. the VBLANK interrupt.

- Event-based GPU interrupts are emulated by the emulation logic, e.g. the AUX channel interrupt.

- GPU command interrupts are emulated by the command parser and the workload dispatcher. The command parser marks which GPU command interrupts would be generated while the commands execute, and the workload dispatcher injects those interrupts into the VM after the workload finishes.
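The three categories differ mainly in *when* the virtual interrupt is raised. The following Python sketch models that timing; the class, its methods, and the event names are hypothetical, and "injecting" just appends to a list instead of updating virtual interrupt registers.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class VgpuIrqState:
    """Hypothetical per-vGPU interrupt emulation state."""
    injected: list[str] = field(default_factory=list)  # events delivered to the VM
    pending: dict[int, list[str]] = field(
        default_factory=lambda: defaultdict(list))      # workload id -> held events

    def inject(self, event: str) -> None:
        self.injected.append(event)

    # 1. Periodic: driven by a per-vGPU timer running at the virtual refresh rate.
    def on_vblank_timer(self) -> None:
        self.inject("VBLANK")

    # 2. Event-based: raised by the emulation code itself, e.g. when an
    #    emulated AUX channel transaction completes.
    def on_aux_transaction_done(self) -> None:
        self.inject("AUX_CHANNEL_DONE")

    # 3. Command interrupts: the command parser records what a workload would
    #    raise; nothing reaches the VM until the dispatcher sees completion.
    def on_cmd_parsed(self, workload_id: int, events: list[str]) -> None:
        self.pending[workload_id].extend(events)

    def on_workload_complete(self, workload_id: int) -> None:
        for event in self.pending.pop(workload_id, []):
            self.inject(event)
```

Holding command interrupts until completion ensures the guest never observes a "done" interrupt for work the hardware has not actually finished.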

Figure 3. Full GPU Interrupt Virtualization

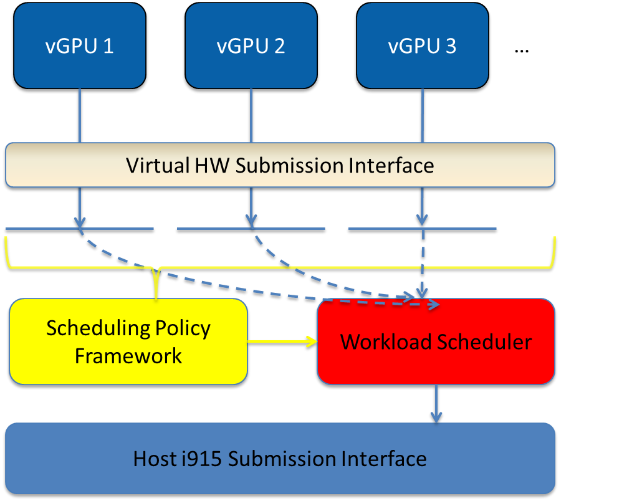

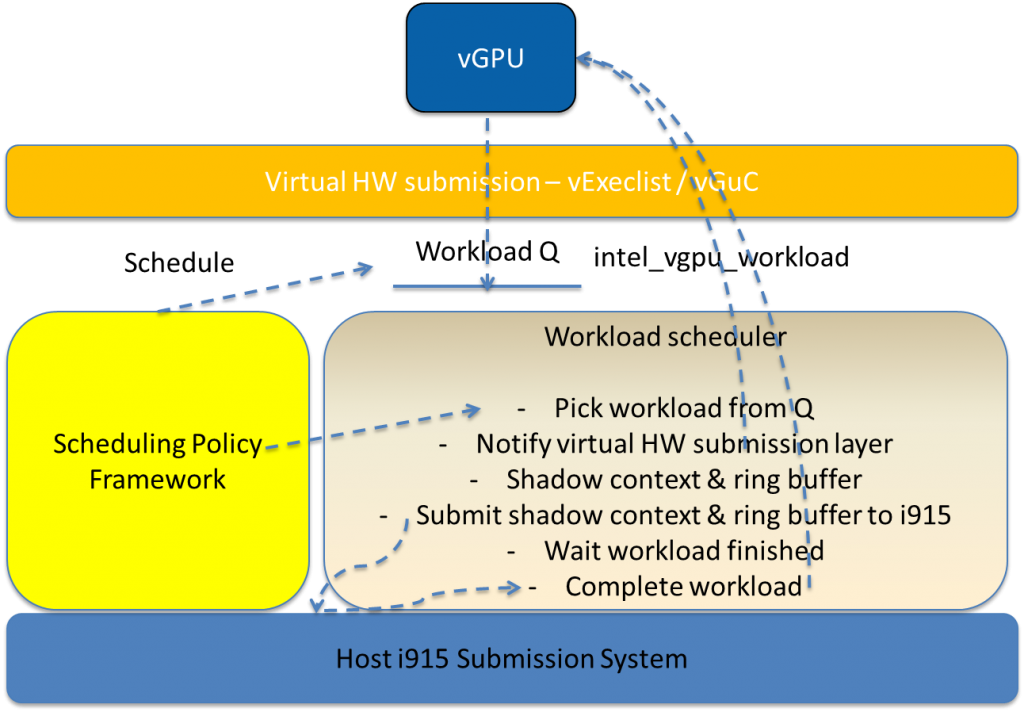

First, let's look at the big picture of vGPU workload scheduling and dispatching.

Figure 4. vGPU Workload Scheduling and Dispatching

Before Broadwell, software on Intel Processor Graphics submitted workloads in the legacy ring buffer mode, which the GVT-g virtual device model no longer supports. Starting with Broadwell, a new HW submission interface named "Execlist" was introduced, giving software better programmability and easier context management. In Intel GVT-g, a vGPU submits workloads through the virtual HW submission interface. Each workload in a submission is represented as an "intel_vgpu_workload" data structure (a vGPU workload), which, after some basic checks and verification, is placed on a per-vGPU, per-engine workload queue.
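The queueing described above can be sketched as a dictionary of FIFOs keyed by (vGPU, engine). This Python model is only loosely inspired by `intel_vgpu_workload`; its fields, the engine list, and the two sanity checks are assumptions chosen for illustration, and the real verification is far more extensive.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

ENGINES = ("RCS", "BCS", "VCS", "VECS")  # example engine names


@dataclass
class VgpuWorkload:
    """Illustrative stand-in for a vGPU workload."""
    vgpu_id: int
    engine: str
    ctx_desc: int   # context descriptor taken from the virtual ELSP write
    ring_tail: int


class WorkloadQueues:
    """One FIFO per (vGPU, engine) pair."""

    def __init__(self) -> None:
        self.queues: dict[tuple[int, str], deque] = {}

    def submit(self, w: VgpuWorkload) -> None:
        # Basic checks before the workload is queued.
        if w.engine not in ENGINES:
            raise ValueError(f"unknown engine {w.engine}")
        if w.ctx_desc == 0:
            raise ValueError("invalid context descriptor")
        self.queues.setdefault((w.vgpu_id, w.engine), deque()).append(w)

    def pop(self, vgpu_id: int, engine: str) -> Optional[VgpuWorkload]:
        # The per-engine scheduler takes the oldest workload, if any.
        q = self.queues.get((vgpu_id, engine))
        return q.popleft() if q else None
```

Keying queues by both vGPU and engine lets each engine's scheduler drain one vGPU's work without touching other engines.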

The scheduling policy framework is the core of the vGPU workload scheduling system. It controls all scheduling actions and gives developers a generic framework for easily developing scheduling policies. The framework decides which vGPU's work is scheduled, but it does not care how a workload is dispatched or completed. All the details of workload dispatching are hidden in the workload scheduler, which actually executes each vGPU workload.
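The split between "which vGPU runs next" (policy) and "how a workload runs" (scheduler) can be expressed as a small pluggable interface. The Python sketch below is hypothetical: `SchedPolicy`, `pick_next`, and the round-robin policy are illustrative and are not the kernel's policy hooks.

```python
from abc import ABC, abstractmethod
from typing import Callable, Optional


class SchedPolicy(ABC):
    """Policy hooks decide *which* vGPU runs next, never *how* it runs."""

    @abstractmethod
    def add_vgpu(self, vgpu_id: int) -> None: ...

    @abstractmethod
    def pick_next(self, has_work: Callable[[int], bool]) -> Optional[int]: ...


class RoundRobinPolicy(SchedPolicy):
    """Example policy: cycle through vGPUs, skipping idle ones."""

    def __init__(self) -> None:
        self.vgpus: list[int] = []
        self.last = -1  # index of the vGPU picked last time

    def add_vgpu(self, vgpu_id: int) -> None:
        self.vgpus.append(vgpu_id)

    def pick_next(self, has_work: Callable[[int], bool]) -> Optional[int]:
        n = len(self.vgpus)
        for step in range(1, n + 1):
            idx = (self.last + step) % n
            if has_work(self.vgpus[idx]):
                self.last = idx
                return self.vgpus[idx]
        return None  # nothing runnable
```

Because the policy only receives a `has_work` predicate, a different policy (for example, one weighted by vGPU type) could be swapped in without changing the dispatch code.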

The workload scheduler handles everything about a single vGPU workload. Each physical HW ring is backed by one workload scheduler kernel thread. The workload scheduler picks a workload from the current vGPU's workload queue, then asks the virtual HW submission interface to emulate the "schedule-in" status for the vGPU. Before submitting the workload to the host i915 driver, it performs context shadowing, command buffer scanning and shadowing, and PPGTT page table pin/unpin and out-of-sync handling. When the vGPU workload completes, the workload scheduler asks the virtual HW submission interface to emulate the "schedule-out" status for the vGPU, which is how the VM graphics driver learns that a GPU workload has finished.
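The per-ring thread and the order of its steps can be sketched as below. This is a simplified Python model, assuming `submit_to_host` blocks until the host request completes; the function name, the step labels, and the log format are hypothetical and only record the sequence described above.

```python
import queue
import threading
from typing import Callable, Optional

PREPARE_STEPS = ("shadow context",
                 "scan and shadow command buffers",
                 "pin PPGTT / handle out-of-sync pages")


def engine_scheduler(engine: str,
                     work: "queue.Queue[Optional[str]]",
                     submit_to_host: Callable[[str], None],
                     log: list) -> None:
    """Body of one per-ring workload scheduler thread (hypothetical sketch)."""
    while True:
        w = work.get()
        if w is None:  # sentinel: stop the thread
            return
        # Emulate "schedule-in" through the virtual HW submission interface.
        log.append((engine, w, "schedule-in"))
        for step in PREPARE_STEPS:
            log.append((engine, w, step))
        submit_to_host(w)  # assumed to return only after the request completes
        log.append((engine, w, "unpin PPGTT"))
        # Emulate "schedule-out"; the guest driver now sees the workload finished.
        log.append((engine, w, "schedule-out"))


if __name__ == "__main__":
    q: "queue.Queue[Optional[str]]" = queue.Queue()
    events: list = []
    t = threading.Thread(target=engine_scheduler,
                         args=("RCS", q, lambda w: events.append(("RCS", w, "host submit")), events))
    t.start()
    q.put("workload-1")
    q.put(None)
    t.join()
    for e in events:
        print(e)
```

Running one thread per ring keeps workloads on different engines independent, while workloads on the same ring are processed strictly in order.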

Figure 5. Dispatching a vGPU Workload

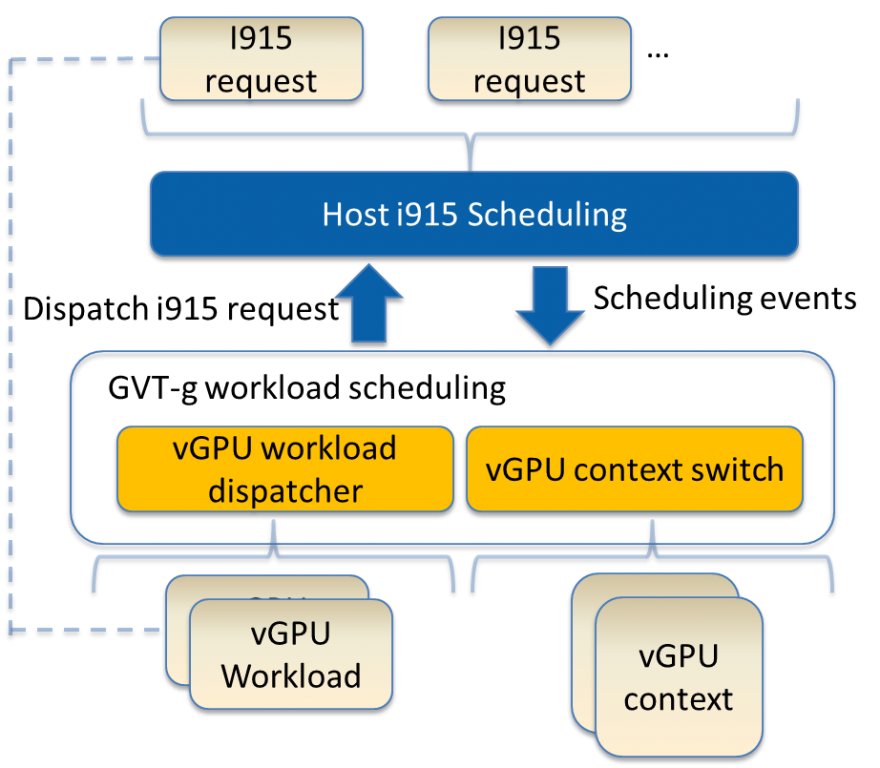

Each vGPU workload is submitted as an i915_gem_request, which represents a workload in the i915 GEM submission system. To receive workload scheduling events from the host i915 driver, Intel GVT-g requires an additional context status notification from the host i915 driver. With this notification, the virtual device model knows exactly when to shadow the context.
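A context status notification is essentially a callback chain that the host calls when a context is switched in or out. The Python sketch below models that idea; `HostEngine`, `GvtListener`, and the two status values are hypothetical stand-ins, and the actions recorded are descriptive labels rather than the real GVT-g handlers.

```python
from enum import Enum, auto
from typing import Callable


class CtxStatus(Enum):
    SCHEDULE_IN = auto()
    SCHEDULE_OUT = auto()


class HostEngine:
    """Stand-in for a host engine that broadcasts context status changes."""

    def __init__(self) -> None:
        self.notifiers: list[Callable[[CtxStatus, int], None]] = []

    def register(self, fn: Callable[[CtxStatus, int], None]) -> None:
        self.notifiers.append(fn)

    def _notify(self, status: CtxStatus, ctx_id: int) -> None:
        for fn in self.notifiers:
            fn(status, ctx_id)

    def run_context(self, ctx_id: int) -> None:
        self._notify(CtxStatus.SCHEDULE_IN, ctx_id)
        # ... the hardware would execute the context here ...
        self._notify(CtxStatus.SCHEDULE_OUT, ctx_id)


class GvtListener:
    """Reacts only to contexts that belong to vGPU workloads."""

    def __init__(self, shadow_ctx_ids: set[int]) -> None:
        self.shadow_ctx_ids = shadow_ctx_ids
        self.actions: list[tuple[str, int]] = []

    def __call__(self, status: CtxStatus, ctx_id: int) -> None:
        if ctx_id not in self.shadow_ctx_ids:
            return  # the host's own contexts are not our business
        if status is CtxStatus.SCHEDULE_IN:
            self.actions.append(("switch in vGPU state", ctx_id))
        else:
            self.actions.append(("copy shadow context back to guest", ctx_id))
```

Filtering on the context ID matters because the host broadcasts status changes for all contexts, including the host's own.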

Figure 6. Workload Scheduling between Host i915 and Virtual device model

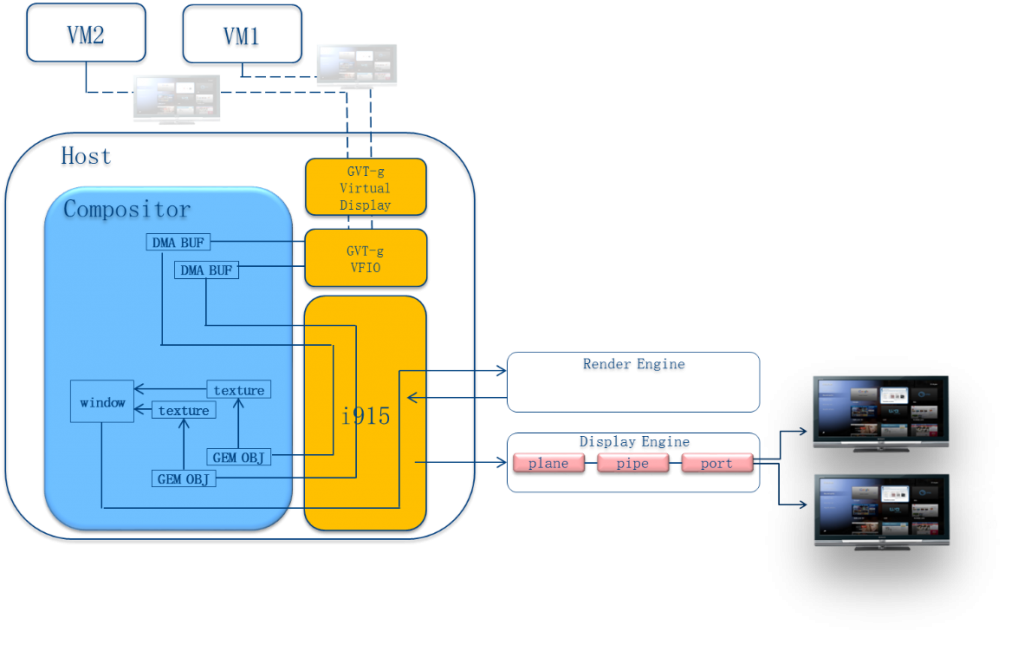

Since the virtual device model is not allowed to touch HW registers in the new architecture, the old display virtualization has to be dropped, together with its concepts, e.g. foreground/background VM and display owner.

In the new architecture, all display-related data exposed to VMs, such as EDID and VBT, is virtualized. In the future, users will be able to customize the virtual display through the VFIO MDEV framework. To get the screen content of VMs, a new dma-buf-based VM framebuffer export mechanism is introduced.
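As a concrete example of virtualized display data, a virtual EDID must at least be a well-formed 128-byte base block: it starts with the fixed 8-byte header, and its last byte is a checksum chosen so that all 128 bytes sum to 0 modulo 256. The Python sketch below shows only that framing; the helper names are hypothetical, and the zeroed fields are placeholders rather than a usable monitor description.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def finalize_edid_block(block: bytearray) -> bytearray:
    """Set byte 127 so that all 128 bytes sum to 0 modulo 256."""
    if len(block) != 128:
        raise ValueError("EDID base block must be 128 bytes")
    block[127] = (-sum(block[:127])) % 256
    return block


def edid_is_valid(block: bytes) -> bool:
    """Check the header and checksum of a 128-byte EDID base block."""
    return (len(block) == 128
            and bytes(block[:8]) == EDID_HEADER
            and sum(block) % 256 == 0)


def make_virtual_edid() -> bytearray:
    block = bytearray(128)
    block[0:8] = EDID_HEADER
    block[18], block[19] = 1, 4  # EDID structure version 1.4
    # Placeholder: a real virtual EDID would also fill in vendor ID,
    # supported timings, and descriptors before computing the checksum.
    return finalize_edid_block(block)
```

Any change to the block, such as editing a timing to customize the virtual display, requires recomputing the checksum.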

Figure 7. Dmabuf-based VM framebuffer export mechanism

The VM framebuffer is first decoded and then exported through the generic VFIO MDEV interface as a dma-buf handle. The user can import this dma-buf handle into the host i915 driver, which generates a new GEM object handle backed by the dma-buf. With this GEM object handle, the user can obtain an EGLImage object through the Mesa EGL extensions. After rendering, the user can flip the GEM object directly onto a specific DRM CRTC via KMS, which is similar to the background/foreground VM in the old GVT-g implementation. Alternatively, the rendered object can be presented through the window system.
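"Decoding" the VM framebuffer means turning the guest's plane state into a description that the consumer of the dma-buf can use: pixel format, width, height, and stride. The Python sketch below assumes a linear (untiled) buffer and a small subset of DRM fourcc formats; `DecodedPlane` and its methods are hypothetical and only illustrate the sanity checks such a descriptor needs.

```python
from dataclasses import dataclass

# Bytes per pixel for a few DRM fourcc codes (subset, for illustration).
BYTES_PER_PIXEL = {"XR24": 4, "AR24": 4, "RG16": 2}


@dataclass(frozen=True)
class DecodedPlane:
    """Hypothetical descriptor of a decoded guest framebuffer."""
    fourcc: str   # DRM fourcc, e.g. "XR24" for XRGB8888
    width: int    # pixels
    height: int   # lines
    stride: int   # bytes per line, may include padding

    def validate(self) -> None:
        bpp = BYTES_PER_PIXEL.get(self.fourcc)
        if bpp is None:
            raise ValueError(f"unsupported format {self.fourcc}")
        if self.stride < self.width * bpp:
            raise ValueError("stride is smaller than one line of pixels")

    def size(self) -> int:
        # Linear layout assumed: every line occupies `stride` bytes.
        return self.stride * self.height
```

Using the stride rather than `width * bpp` for the size accounts for per-line padding that the guest driver may have added.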

The GVT-g upstream development model now follows the i915 development model. All patches go to the gvt-next branch. In particular, patches that fix critical problems also go to the gvt-fixes branch; they are then merged into the drm-intel-fixes branch and land in the next Linux stable release.

Developers should check out the development code from https://github.com/01org/gvt-linux.git and base their patches on the *gvt-staging* branch.

References:

[1] Intel GVT-g is now included in RHEL 7.4 as a technology preview feature.

[2] To support some features and workarounds that the host i915 driver has not enabled but the guest still requests, the virtual device model touches some HW registers during vGPU context switches.

[3] Only vExeclist is currently supported. The framework will support vGuC on new platforms.

[4] https://github.com/torvalds/linux/blob/master/Documentation/vfio-mediated-device.txt

[6] https://01.org/linuxgraphics/documentation/hardware-specification-prms