
Sharing Guest Framebuffer with Host

Author: Tina Zhang (tina.zhang@intel.com); Terrence Xu (terrence.xu@intel.com)

Intel® GVT-g now provides a mechanism for sharing guest framebuffers directly with the host. With this feature, host user space can access guest framebuffers directly through the dma-buf interface.

This post introduces the idea behind this feature and shows sample usages, to help users understand the mechanism and apply it in their own projects.

Today, thanks to the Intel® GVT-g [1] project, multiple vGPUs can be created on Intel® processor graphics for shared GPU virtualization. The next question is how to deliver the guest framebuffer to remote users efficiently. In the past, a guest remote protocol solution has been widely used: a remote protocol server is installed in the guest image, and users access the VM’s desktop through remote protocol client applications.

Besides the guest remote protocol solution, there is also a need to remote the guest framebuffer through the host software stack when the guest solution cannot be applied (e.g. because of the cost of supporting different guest OSes, or for diagnostic purposes). Host-side rendering provides a unified and consistent solution stack.

Intel® GVT-g, as a full GPU virtualization solution, emulates GPU hardware resources including the display. This makes it possible for the host to obtain the guest’s framebuffer memory address directly by decoding the guest’s virtual display registers. Application developers can therefore use the new IOCTL ABIs proposed by this solution to access and render guest framebuffers in their projects.

Here are some typical use cases to which the host-side rendering solution applies.

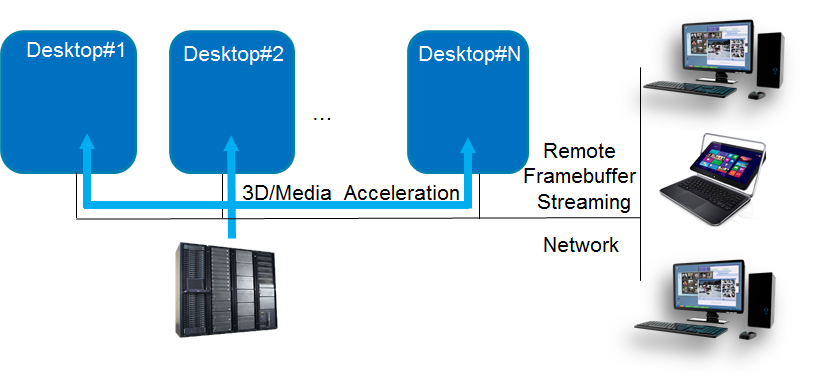

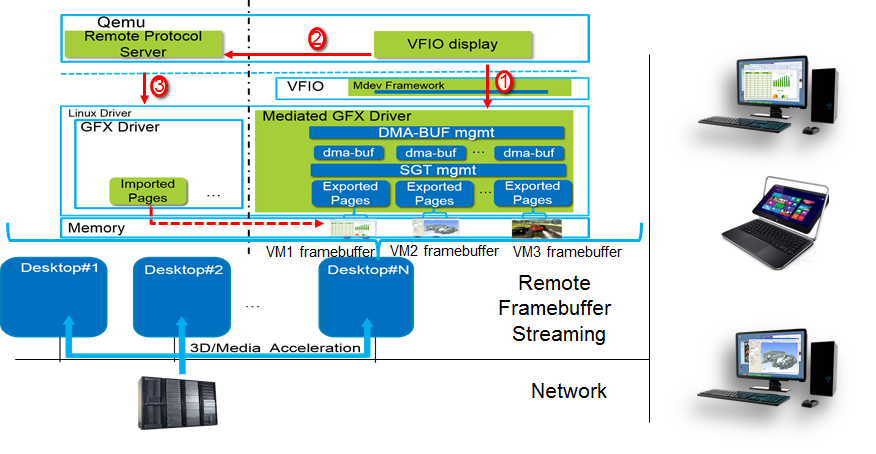

This host-side rendering solution can be adopted on the server side for remote desktop or cloud gaming usages, or for diagnostic purposes. With this solution, the host remote display protocol can directly access guest framebuffers and stream them to remote clients.

Figure 1: Remote desktop/cloud gaming usages [3]

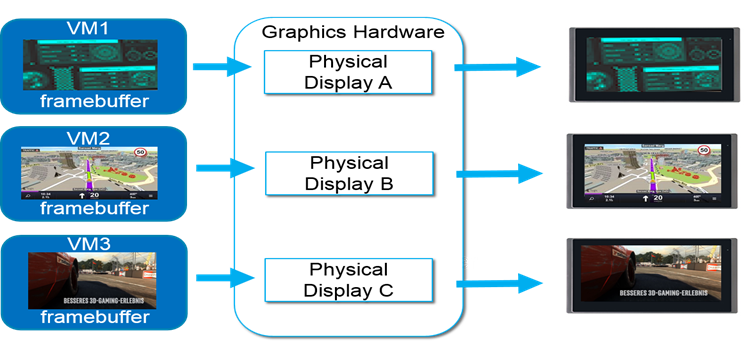

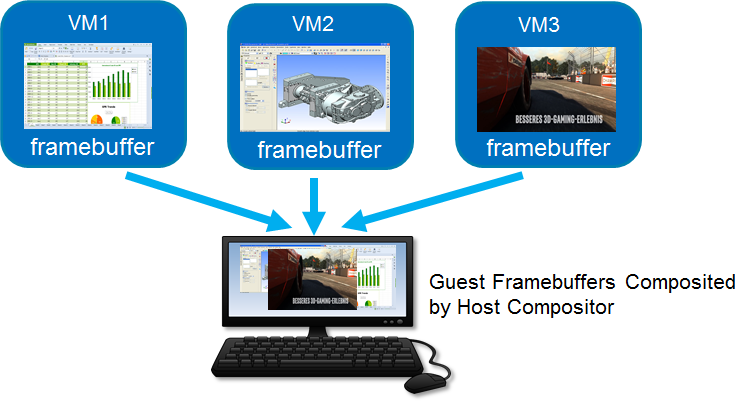

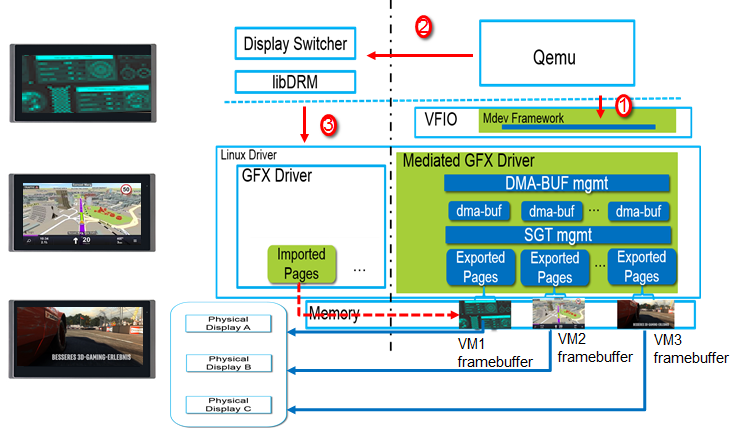

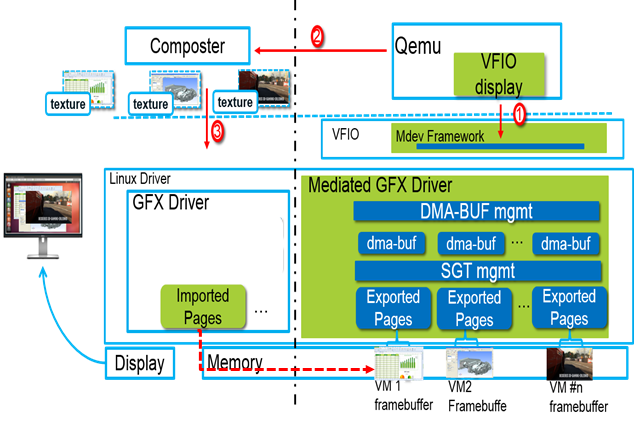

In client virtualization scenarios, the host GPU mediator may directly configure a guest framebuffer onto a local display panel without composition. Another use case is a host compositor that directly maps the guest’s framebuffer as a surface, which is then composited into visual effects through OpenGL.

Figure 2: Rendering without composition [3]

Figure 3: Rendering with composition [3]

The design aims to export the guest framebuffer through a generic interface that user space can use, based on the existing Linux graphics stack. To achieve this, we chose a solution based on the kernel dma-buf subsystem, which is the kernel's standard way to share DMA buffers and is already supported by the Linux graphics stack. In user space, remote protocols such as SPICE [5] already support using a dma-buf file descriptor to reference a texture for local rendering, and multimedia frameworks such as GStreamer [6] support streaming video through the dma-buf interface. The dma-buf interface is therefore general enough to work with the existing software stacks.

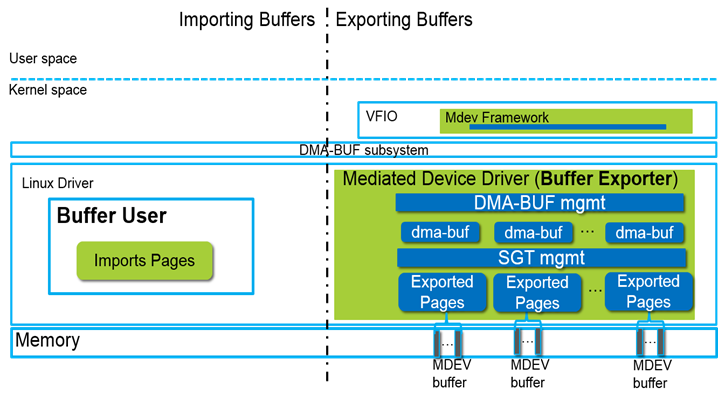

Figure 4 shows the high-level architecture, which is based on the VFIO mediated device framework [7]. VFIO is a secure user space driver framework that can directly assign physical I/O devices to VMs. The mediated device framework builds on VFIO to mediate a physical I/O device into several virtual mediated devices, each of which can be assigned to a VM. As the figure shows, the mechanism works mainly as a buffer exporter that exports a mediated device buffer as a dma-buf object with an installed file descriptor. Buffer users on the host side act as buffer importers. The blue components are introduced by the implementation of this mechanism, including the user interface defined in the mediated device framework and the device-specific implementation in the mediated device driver. Basically, the mediated device driver needs to implement the dma-buf operations for the exposed mediated device buffer and manage the SGT (scatter-gather table), which describes the actual backing storage of the buffer.

Figure 4: High-level architecture [3]

Two IOCTL ABIs, based on the VFIO mediated device framework, are added so that user space can access a vGPU’s framebuffers: VFIO_DEVICE_QUERY_GFX_PLANE queries information about the guest's current framebuffer, and VFIO_DEVICE_GET_GFX_DMABUF returns a dma-buf file descriptor for a specific guest framebuffer to user space.

Usage Example:

    struct vfio_device_gfx_plane_info plane;
    int fd;

    memset(&plane, 0, sizeof(plane));
    plane.argsz = sizeof(plane);

    /* Probe whether the mediated vGPU driver supports exposing the guest framebuffer as a dma-buf */
    plane.flags = VFIO_GFX_PLANE_TYPE_PROBE | VFIO_GFX_PLANE_TYPE_DMABUF;
    if (ioctl(vdev->vbasedev.fd, VFIO_DEVICE_QUERY_GFX_PLANE, &plane) != 0) {
        /* Exposing the buffer as a dma-buf is not supported */
    }

    /* Get the current guest framebuffer information, including the "dmabuf_id" field */
    plane.flags = VFIO_GFX_PLANE_TYPE_DMABUF;
    plane.drm_plane_type = plane_type;
    ioctl(vdev->vbasedev.fd, VFIO_DEVICE_QUERY_GFX_PLANE, &plane);

    /* Then check whether the framebuffer has valid contents */
    if (!plane.drm_format || !plane.size) {
        /* The contents of this framebuffer are invalid */
    }

    /* Get the file descriptor of this guest framebuffer and use it to… */
    fd = ioctl(vdev->vbasedev.fd, VFIO_DEVICE_GET_GFX_DMABUF, &plane.dmabuf_id);
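The probe, query, validity check and fd retrieval steps can also be gathered into one function with explicit error handling. The following is only a sketch under stated assumptions: the helper name `vgpu_plane_to_dmabuf_fd()` and its error convention are hypothetical and are not part of QEMU or the kernel, and it assumes kernel headers recent enough (4.16 or later) for `<linux/vfio.h>` to define the two ioctls.

```c
#include <errno.h>
#include <stdint.h>
#include <string.h>
#include <sys/ioctl.h>
#include <linux/vfio.h>

/*
 * Hypothetical helper: query one plane of a mediated vGPU and return a
 * dma-buf fd for it. device_fd is the VFIO device fd, drm_plane_type is a
 * DRM_PLANE_TYPE_* value. Returns the dma-buf fd (>= 0) on success or a
 * negative errno value on failure. On success, *info describes the plane.
 */
int vgpu_plane_to_dmabuf_fd(int device_fd, uint32_t drm_plane_type,
                            struct vfio_device_gfx_plane_info *info)
{
    int fd;

    /* Step 1: probe whether the driver can expose planes as dma-bufs. */
    memset(info, 0, sizeof(*info));
    info->argsz = sizeof(*info);
    info->flags = VFIO_GFX_PLANE_TYPE_PROBE | VFIO_GFX_PLANE_TYPE_DMABUF;
    if (ioctl(device_fd, VFIO_DEVICE_QUERY_GFX_PLANE, info) != 0)
        return -errno;

    /* Step 2: query the current guest framebuffer for the requested plane. */
    memset(info, 0, sizeof(*info));
    info->argsz = sizeof(*info);
    info->flags = VFIO_GFX_PLANE_TYPE_DMABUF;
    info->drm_plane_type = drm_plane_type;
    if (ioctl(device_fd, VFIO_DEVICE_QUERY_GFX_PLANE, info) != 0)
        return -errno;

    /* Step 3: no format or zero size means there is nothing valid to show. */
    if (!info->drm_format || !info->size)
        return -ENODATA;

    /* Step 4: turn the dmabuf_id into a dma-buf file descriptor. */
    fd = ioctl(device_fd, VFIO_DEVICE_GET_GFX_DMABUF, &info->dmabuf_id);
    if (fd < 0)
        return -errno;

    return fd;
}
```

A caller would pass the VFIO device fd and, for the primary plane, the value of DRM_PLANE_TYPE_PRIMARY, and would close() the returned fd once it is no longer needed.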

With the file descriptor in hand, let’s see how the sample use cases render guest framebuffers through the dma-buf interface.

On the server side, user space (e.g. QEMU) first gets the fd of an exposed guest framebuffer and then sends it to the remote protocol server (e.g. the SPICE server). The remote protocol server can access the buffer directly through the dma-buf file descriptor and use the dma-buf specific APIs provided by a media framework (e.g. the gst_dmabuf_* APIs provided by GStreamer) to stream it to remote clients.

Figure 5: Remote desktop/cloud gaming usages based on dma-buf [3]

In the client scenario, user space QEMU gets the file descriptor of an exposed guest framebuffer and sends it to a display switcher over a UNIX domain socket. The display switcher is a user space application that uses the libdrm KMS APIs to control the local display panels. With the dma-buf file descriptor, the display switcher first obtains a buffer handle by calling drmPrimeFDToHandle(), then creates a framebuffer object from the buffer handle by calling drmModeAddFB2WithModifiers(). Finally, the framebuffer is shown by invoking drmModeSetCrtc() with the framebuffer as one of its input arguments.

Figure 6: Rendering without composition based on dma-buf [3]
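Handing a file descriptor to another process over a UNIX domain socket, as in the client scenario above, is usually done with SCM_RIGHTS ancillary data. The following is a minimal sketch of that technique, not the actual code of QEMU or of any display switcher; the function names `send_fd()` and `recv_fd()` are hypothetical.

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/uio.h>

/* Control buffer sized and aligned for exactly one file descriptor. */
union fd_ctrl {
    char buf[CMSG_SPACE(sizeof(int))];
    struct cmsghdr align;
};

/* Send one fd over a connected UNIX domain socket. Returns 0 or -1. */
int send_fd(int sock, int fd)
{
    char dummy = 'F'; /* one byte of ordinary data accompanies the fd */
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union fd_ctrl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;

    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    /* Describe the fd as SCM_RIGHTS ancillary data. */
    cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receive one fd sent by send_fd(). Returns the new fd or -1. */
int recv_fd(int sock)
{
    char dummy;
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union fd_ctrl ctrl;
    struct msghdr msg;
    struct cmsghdr *cmsg;
    int fd;

    memset(&ctrl, 0, sizeof(ctrl));
    memset(&msg, 0, sizeof(msg));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    if (recvmsg(sock, &msg, 0) <= 0)
        return -1;

    /* Accept only a single SCM_RIGHTS message carrying exactly one fd. */
    cmsg = CMSG_FIRSTHDR(&msg);
    if (!cmsg || cmsg->cmsg_level != SOL_SOCKET ||
        cmsg->cmsg_type != SCM_RIGHTS ||
        cmsg->cmsg_len != CMSG_LEN(sizeof(int)))
        return -1;

    memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;
}
```

The receiver gets a new descriptor number that refers to the same underlying buffer, so no pixel data is copied between the two processes.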

Similarly, for the client composition usage, QEMU gets the file descriptor of an exposed guest framebuffer and sends it to the host compositor, where an EGLImage can be created with eglCreateImageKHR() and later bound to a texture with glBindTexture(). Finally, the guest’s framebuffer can be rendered as a texture through OpenGL.

Figure 7: Rendering with composition based on dma-buf [3]

After 18 revisions, the kernel patch set “Dma-buf support for Intel® GVT-g” was finally queued for the kernel 4.16 merge window. Over the past several months there were many discussions among maintainers from Red Hat, developers from the Intel i915 and Intel® GVT-g teams, and developers from NVIDIA, in order to arrive at a set of general user space interfaces that cover both the dma-buf interface and the region usage from NVIDIA, together with a secure and robust implementation.

In user space, QEMU is currently the main user. Gerd Hoffmann, the QEMU graphics maintainer from Red Hat, has designed and implemented patches that use the proposed user interfaces to access and render the guest framebuffer. The main idea is to introduce a new property called “x-display” to enable or disable the display supported by VFIO mediated vGPU devices. With “x-display=on”, the VFIO mediated vGPU gets a QEMU graphics console, whose callbacks are invoked by the various QEMU UIs to return the dma-buf file descriptors of the exposed guest framebuffers. At the time of this post, the QEMU side is a work in progress. Local display using a VFIO mediated vGPU works through the GTK UI and local SPICE. Remote display can be tried through SPICE or VNC with the help of “egl-headless”, a QEMU UI that copies the content of a guest framebuffer back into a classic QEMU display surface so that it can be used by all kinds of QEMU UIs.

Please refer to the “Intel® GVT-g Setup Guide” [2] to complete the basic environment setup. Since the user space QEMU UI part is a work in progress, the boot scripts referenced below are based on the work at the time of this post. The “Intel® GVT-g Setup Guide” [2] is kept up to date and can always be consulted.

On the kernel side, the “Dma-buf support for Intel® GVT-g” patches are in the staging branch [8]. On the QEMU side, the following branch currently tracks the work-in-progress QEMU UI branch [4].

    git clone https://github.com/intel/Igvtg-qemu
    cd Igvtg-qemu
    git checkout qa/dma_buf
    ./configure --prefix=/usr --enable-kvm --disable-xen --enable-debug-info \
        --enable-debug --enable-sdl --enable-vhost-net --enable-spice \
        --disable-debug-tcg --target-list=x86_64-softmmu --enable-opengl
    make -j8
    make install

The main usages fall into two groups: remote display and local display. Although support for the VFIO mediated vGPU’s display is still a work in progress, here are some VM boot scripts that users can use to try the latest work.

Remote display:

1) SPICE

This can be tried by adding the display options “-display egl-headless -spice disable-ticketing,port=<num>” to the VM boot command, together with “x-display=on,x-igd-opregion=on” [9] as part of the vGPU properties (“-device vfio-pci,sysfsdev=xxx,..”).

Here is an example VM boot script:

    /usr/bin/qemu-system-x86_64 \
        -m 2048 -smp 2 -M pc \
        -name local-display-vm \
        -hda /home/img/linux.qcow2 \
        -bios /usr/bin/bios.bin -enable-kvm \
        -net nic,macaddr=52:54:00:10:00:1A -net tap,script=/etc/qemu-ifup \
        -vga none \
        -display egl-headless -spice disable-ticketing,port=5910 \
        -k en-us \
        -machine kernel_irqchip=on \
        -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 \
        -cpu host -usb -usbdevice tablet \
        -device vfio-pci,sysfsdev=/sys/bus/pci/devices/0000:00:02.0/59c57b6c-13ee-4055-846e-e4d3dc55d389,x-display=on,x-igd-opregion=on

After the VM finishes booting, “spicy”, a SPICE client test application, can be used to connect to the VM’s display and test it.

Typical spicy usage is:

    spicy -h <host ip> -p <port>

2) VNC

This is the same as SPICE, but uses “-vnc :<num>” instead of the SPICE options. Here is the reference VM boot script:

    /usr/bin/qemu-system-x86_64 \
        -m 2048 -smp 2 -M pc \
        -name local-display-vm \
        -hda /home/img/linux.qcow2 \
        -bios /usr/bin/bios.bin -enable-kvm \
        -net nic,macaddr=52:54:00:10:00:1A -net tap,script=/etc/qemu-ifup \
        -vga none \
        -display egl-headless \
        -vnc :1 \
        -k en-us \
        -machine kernel_irqchip=on \
        -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 \
        -cpu host -usb -usbdevice tablet \
        -device vfio-pci,sysfsdev=/sys/bus/pci/devices/0000:00:02.0/59c57b6c-13ee-4055-846e-e4d3dc55d389,x-display=on,x-igd-opregion=on

vncviewer is one VNC client that can be used to access the VM’s display.

3) Libvirt

You can also create a VM with a VFIO mediated vGPU display through virsh. Here is an example:

    <domain type='kvm' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>

Local display:

1) “GTK” UI

With the display option “-display gtk,gl=on”, and “x-display=on,x-igd-opregion=on” as part of the vGPU properties, the VM boot command could look like this:

    /usr/bin/qemu-system-x86_64 \
        -m 2048 -smp 2 -M pc \
        -name local-display-vm \
        -hda /home/img/linux.qcow2 \
        -bios /usr/bin/bios.bin -enable-kvm \
        -net nic,macaddr=52:54:00:10:00:1A -net tap,script=/etc/qemu-ifup \
        -vga none \
        -display gtk,gl=on \
        -k en-us \
        -machine kernel_irqchip=on \
        -global PIIX4_PM.disable_s3=1 -global PIIX4_PM.disable_s4=1 \
        -cpu host -usb -usbdevice tablet \
        -device vfio-pci,sysfsdev=/sys/bus/pci/devices/0000:00:02.0/59c57b6c-13ee-4055-846e-e4d3dc55d389,x-display=on,x-igd-opregion=on

2) SPICE for local display

The same properties as for SPICE remote display can be used here, as long as the client (e.g. spicy) runs on the same machine as the VMs. In addition, since the client and server run on the same machine, the dma-buf file descriptor can be sent over a UNIX domain socket for better performance, without any texture copy. Hence, the SPICE properties in the VM boot script could look like this: “-spice disable-ticketing,gl=on,unix,addr=/tmp/spice-vgpu”. The “-display egl-headless” option can be removed, since “egl-headless” is no longer needed to read the texture (a.k.a. the guest framebuffer) back.

Here is the reference usage of spicy:

    spicy --uri=spice+unix:///tmp/spice-vgpu

3) Libvirt for local display

This is the same as Libvirt for remote display, but this time try virt-manager on the same machine as the running VMs.

Many thanks to Alex Williamson, Gerd Hoffmann, Joonas Lahtinen, Chris Wilson, Zhenyu Wang, Zhi Wang and Kevin Tian for reviewing and commenting on the “Dma-buf support for Intel® GVT-g” patch set. Thanks also to Hang Yang, Kevin Tian and Zhenyu Wang for reading and commenting on drafts of this post.

1: Intel® Graphics Virtualization Technology –g (Intel® GVT-g)

2: https://github.com/intel/gvt-linux/wiki/GVTg_Setup_Guide

3: “Generic Buffer Sharing Mechanism for Mediated Devices” at 2017 KVM Forum

4: repo: git://git.kraxel.org/qemu branch: work/intel-vgpu

5: https://www.spice-space.org/

6: https://gstreamer.freedesktop.org/

7: https://www.kernel.org/doc/Documentation/vfio-mediated-device.txt

8: repo: https://github.com/intel/gvt-linux branch: staging

9: “x-display” is changed to “display” as a supported feature enabled through libvirt